That selfie you posted can be used to train machines. Paige Cockburn* says it might not be a bad thing.

Did you hear the one about the person who shared a smoking-hot selfie on social media?

It got plenty of likes, but the biggest was from the tech companies who used it to train their artificial intelligence systems.

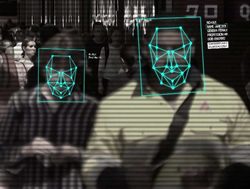

This week, consumer group CHOICE revealed retail giants Kmart, Bunnings and The Good Guys have been using facial recognition technology to capture the biometric data of consumers in some of their stores.

Bunnings told CHOICE it was used to “identify persons of interest who have previously been involved in incidents of concern in our stores”.

Kmart and The Good Guys did not respond to the ABC’s requests for comment.

As part of its investigation, CHOICE asked more than 1,000 people what they thought about this technology – 65 per cent said it was concerning and some described it as “creepy and invasive”.

You may agree, but it’s worth considering how this kind of surveillance stacks up with the decisions we make every day to give our personal data and images away willingly.

Sharing selfies on social media platforms, using a streaming service and using a loyalty card all divulge more personal information than the facial recognition technology CHOICE was probing.

Although Meta, the global behemoth that owns Facebook and Instagram, stopped using the tech last year, that doesn’t mean your pictures aren’t still harvested by companies that build searchable databases of faces.

That might be news to many people, and it may even prompt some to delete their accounts.

Most won’t.

“The algorithms will interpret these photos and use those results to better identify the individual captured in the surveillance image,” said Dennis B Desmond, an expert in cyberintelligence at the University of the Sunshine Coast.

And it doesn’t matter if they are high- or low-quality images.

“Bad or blurry images are also useful in training the algorithm, since surveillance imagery is not always full face, front-facing, or clear and non-pixelated,” he said.

Are the benefits misunderstood?

Dr Desmond said many people don’t understand what they can obtain in exchange for giving up a certain level of privacy.

In Osaka, Japan, facial-recognition tech is used at some train stations to let people pass through turnstiles without having to get out their travel card.

Retailers rely on it to reduce shoplifting.

They can be notified if someone who has stolen from the store before enters it again.

Law enforcement agencies across Australia use it to disrupt serious and violent crime, as well as identity theft.

Dr Desmond said if people heard more about these tangible benefits, they might have a different attitude.

“People see a lot about the collection, but they rarely hear about the use of this data for interdiction or prevention,” he said.

But our feelings towards this technology, which was first conceived in the 1960s in the US, likely won’t change until there’s more transparency.

Online, people can somewhat restrict what data is stored by changing their cookie preferences.

But, short of wearing a disguise, we can’t opt in or out of facial recognition when it’s used in settings like retail.

In fact, there’s no right to personal privacy in Australia, and no dedicated laws about how data obtained through facial recognition can be used, unless you live in the ACT.

Sarah Moulds, a senior law lecturer at the University of South Australia, doesn’t believe facial recognition is inherently bad, but said three crucial elements need clearing up: consent, quality and third-party use.

If people haven’t consented to surveillance, if they can’t trust the vision is high quality and if they don’t know who the data is being shared with, they have a right to be concerned, Dr Moulds said.

High-quality imaging is of particular importance to reduce racial biases, as the technology is most accurate at identifying white men.

Because of this, Australia’s Human Rights Commission has warned against using facial-recognition images in “high-risk circumstances”.

“We should not be relying on this technology when it comes to things like proving the innocence of someone or determining someone’s refugee status or something like that,” Dr Moulds said.

Tech will keep guessing how we live

For a lot of people, it won’t really matter if their face is scanned in certain settings.

Things become more complicated if that biometric information is combined with other data, like their financial or health records.

Experts say from that combination, someone could infer things like how much you earn, what your educational level is and what you get up to on the weekend.

It could also help companies make decisions on whether to give you credit, insurance, a job.

The list goes on.

“It becomes kind of like the Minority Report … people worry companies are going to make decisions on my health care based on the fact that I’m buying chocolate every week,” Dr Desmond said.

But the reality is, this kind of data is already available through our online activities, which have been harvested and sold for years.

Notwithstanding that, Dr Desmond said lawmakers need to take a more proactive approach to regulating facial recognition.

“I think there absolutely needs to be more government regulation and control over the technologies that are employed in the commercial sector when they involve the privacy and the rights of individuals,” he said.

Dr Moulds said tougher laws are also imperative if vulnerable people — such as those without formal identification documents — are to be protected from the potential misuse of artificial intelligence.

And, ultimately, there will always be people who just want to enjoy the freedom to be forgotten when they walk out of a supermarket.

“[Because] as we have seen, neither the government nor the private sector have very good records of keeping our data safe from loss or misuse,” Dr Desmond said.

*Paige Cockburn is a digital producer and reporter for the ABC in Sydney.

This article first appeared at abc.net.au