James Vincent* says a recent Facebook outage inadvertently revealed how users’ photos have become machine readable.

Image: Gerd Altmann

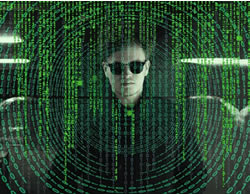

Everyone knows the bit in The Matrix when Neo achieves digital messiah status and suddenly sees “reality” for what it really is: lines of trailing green code.

Well, thanks to an outage recently affecting Facebook, users of the social network have been given a similar peek behind the digital curtain, with many images on the site replaced with the tags they’ve been assigned by the company’s machine vision systems.

So, if you browsed through your uploaded photos, instead of seeing holiday snaps or pictures of food and friends, you’d be shown text saying things like “image may contain: people smiling, people dancing, wedding and indoor” or just “image may contain: cat.”

In short: this is how your life looks to a computer.

This is how Facebook’s AI is judging you.

Do you feel ashamed before the all-seeing digital eye?!

The same image tags showed up on Instagram. As well as describing general scenes and objects, they also suggested who was in a photo, based on Facebook’s facial recognition.

(Since 2017, the company has also used facial recognition to spot you in photos you’re not tagged in.)

Facebook has been using machine learning to “read” images in this way since at least April 2016, and the project is a big part of the company’s accessibility efforts.

Such tags are used to describe photos and videos to users with sight impairments.

What’s not clear is whether Facebook also uses this information to target ads.

These images contain a lot of data about users’ lives that they might otherwise shield from Facebook: whether you’ve got a pet, what your hobbies are, where you like going on holiday, or whether you’re really into vintage cars, or swords, or whatever.

Back in 2017, one programmer was motivated by these questions to make a Chrome extension that revealed these tags.

As they wrote at the time: “I think a lot of internet users don’t realise the amount of information that is now routinely extracted from photographs.”

Judging by the reactions on Twitter to this latest outage, this is certainly new information to a lot of people.

We reached out to Facebook to confirm whether it uses this data for ad targeting, but the company had not responded by publication time.

Regardless of how this information is being used, though, it’s a fascinating peek behind the scenes at one of the world’s biggest data gathering operations.

It also shows to what degree the visual world has become machine readable.

Improvements in deep learning in recent years have truly revolutionised the world of machine vision, and visual content online is often as legible to machines as text.

But once something is legible, of course, it becomes easy to store, analyse, and extract data from.

It’s only when the system breaks down, as it did earlier this month, that we realise it’s happening at all.

* James Vincent is a reporter on AI and robotics for The Verge. He tweets at @jjvincent.

This article first appeared at www.theverge.com.