Carlos Santiso* says Artificial Intelligence in the public sector can be an engine for innovation in Government — but only if we get it right.

The current debate on artificial intelligence (AI) tends to focus on its potential for the digital economy, the importance of regulation that is fit-for-purpose, and the role of Governments as regulators.

Indeed, Governments play a key role in making AI work for innovation, growth and public value.

However, Governments are also prime users of AI to improve the functioning of the machinery of government and the delivery of public services.

The focus of our work in the Public Governance Directorate of the Organisation for Economic Co-operation and Development (OECD) is precisely on the role of Governments as direct users of AI and as providers of AI-enabled services.

We look at where Governments can unleash, and are already unleashing, many benefits, not just for the internal business of government but for society as a whole. As a game changer for Governments, AI is likely to reset both.

Leveraging AI as a tool for better policies, services and decisions requires Governments to think strategically, get the balance right between opportunities and risks, and think outside the public sector box to innovate and collaborate with new players.

Ad-hoc experiments and initiatives can be great sources of learning, but Governments need to have a strategy in place for AI that is specifically designed for and adapted to the public sector.

More than 60 countries have already created a national AI strategy. Importantly, most of these include a dedicated focus on public sector transformation.

However, not all strategies are created equal. Some are more like a list of principles or a checklist of projects.

To really drive AI in the public sector, strategies need to be actionable, with objectives and specific actions, measurable goals, responsible actors, time frames, funding mechanisms and monitoring instruments (the last being a rarity in AI strategies).

Having these elements in place helps to ensure sustainability and follow-through on commitments and pledges, which has been one of the main challenges we have seen with AI in the public sector.

This, of course, will need to go hand in hand with strong and vocal leadership so that AI in the public sector gains traction.

This could take the form of committed leaders making AI a priority, but also of new institutional arrangements in charge of AI coordination.

For example, Spain created a Secretary of State for Digitalisation and Artificial Intelligence and the UAE created a Minister of State for Artificial Intelligence.

Governing AI is also about making sure that it works for society and democracy.

Getting the right balance between its risks and benefits will mean putting citizens at the centre and ensuring that underlying data is inclusive and representative of society, to avoid bias and exclusion.

It will also mean setting actionable standards that are fit-for-purpose for the public sector to implement the high-level policy principles being adopted by Governments.

The OECD’s AI Principles and the Good Practice Principles for Data Ethics in the Public Sector are two key tools to accompany Governments in such efforts.

These aspects should be built into the design of public sector strategies from the outset, and they should be leveraged to inform the decisions of leaders and practitioners alike.

While the public sector's use of AI is often compared to its use in the private sector, Governments face a higher bar in terms of transparency and accountability.

As Government adoption of AI grows rapidly, so does the public sector's need to ensure it is being used in a responsible, trustworthy and ethical manner.

Key to achieving this within Governments are the actors in the accountability ecosystem, including ombudspersons, national audit institutions, internal auditors within organisations, and policy-making bodies that establish rules and guide the agencies that design and implement AI systems.

These different groups have had an uneasy relationship, with limited collaboration, often as part of a broader debate on the right balance between innovation and regulation.

However, emerging technologies and governance methods, as well as the increasing wealth of available data, are prompting a rethinking of traditional public accountability and of the roles of these actors.

Within the OECD Public Governance Directorate, we believe that tremendous potential can be unlocked by forging new paths to collaboration and experimentation within the accountability ecosystem, with all actors working together to achieve the collective goal of accountable AI in the public sector.

While numerous areas are crying out for innovation in how oversight is carried out, one of the most important policy arenas in which Governments are seeking to achieve this is algorithmic accountability.

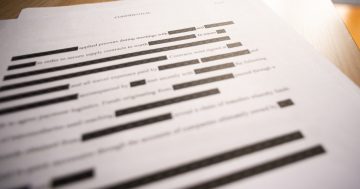

There is intense debate on how to ensure that the development and deployment of algorithms in the public sector are transparent and accountable.

Taking action in this area is crucial for ensuring the long-term viability and legitimacy of AI in the public sector.

Without this, Governments will lack citizen trust and open themselves to major risks when problems with algorithms are uncovered.

The OECD is currently exploring work on promoting collaboration among ecosystem actors in pursuit of algorithmic accountability and we are seeking collaboration opportunities with others who are interested.

*Carlos Santiso is Head of Digital, Innovative and Open Government in the OECD’s Public Governance Directorate.

A fuller version of this article was originally published on the OECD website.