Ben Dickson* says many fears about AI are overblown, but algorithmic bias could have a very real impact on employment.

Artificial intelligence (AI) might be coming for your next job, just not in the way you feared.

The past few years have seen any number of articles that warn about a future where AI and automation drive humans into mass unemployment.

To a considerable extent, those threats are overblown and distant.

But a more imminent threat to jobs is that of algorithmic bias, the effect of machine learning models making decisions based on the wrong patterns in their training examples.

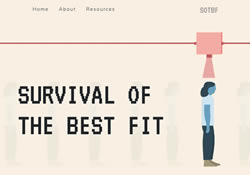

An online game developed by computer science students at New York University aims to educate the public about the effects of AI bias in hiring.

Titled Survival of the Best Fit, the browser game eloquently shows how misusing machine learning algorithms can lead to problematic hiring decisions and deprive qualified candidates of the jobs they deserve.

The game was funded through a $25,000 grant from Mozilla’s Creative Media Awards, which supports the development of games, films, and art projects aimed at raising awareness about the unintended consequences of AI.

‘Survival of the Best Fit’

You start as the CEO of a fast-growing startup company that has just raised $1 million in funding.

Your task is to hire engineers for your company.

At first you manually review the résumés of applicants and decide who to hire.

But your investors are pushing you to hire faster if you want to secure the next round of investment.

As the speed of hiring picks up, you find yourself too overwhelmed to meet the requirements of your investors.

Your engineers come up with a solution to use machine learning to automate the hiring process.

The AI algorithm reviews the CVs of previous applications you processed to find and make sense of the criteria you use to hire or reject people.

It then combines this information with data it obtains from a large tech company such as Amazon (machine learning needs a lot of training data).

The AI then uses the gleaned patterns to “copy your hiring decisions.”

At first, using machine learning to automate the hiring process is very attractive.

You’re able to hire 10 times faster than before, to the satisfaction of your investors.

But then you start getting complaints from highly qualified people whose applications were rejected by your AI system.

As the complaints start to stack up, reporters take note.

News spreads that your company’s AI is biased against residents of a certain city.

In the end, AI bias becomes your undoing: you get sued for hiring discrimination, investors pull out, and your company shuts down.

Survival of the Best Fit is an oversimplification of algorithmic bias, but it communicates the fundamentals of how the behaviour of machine learning models becomes skewed in the wrong direction.

In between the different stages, the game displays important information that explains in layman’s terms how AI bias takes shape.

How do AI algorithms become biased?

A few reminders about AI bias are worth mentioning.

As Survival of the Best Fit highlights, the most popular branch of AI today is machine learning, a type of software that develops its behaviour from experience rather than explicit rules.

If you provide a machine learning algorithm with 1,000 pictures of cats and 1,000 pictures of dogs, it will be able to find the common patterns that define each of those classes of images.

This is called training.

After training, when you run a new picture through the AI algorithm, it will be able to tell you with a level of confidence to which of those classes it belongs.
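To make the idea of training and confidence concrete, here is a minimal sketch in Python. It is not how image classifiers are actually built: it assumes each picture has already been reduced to two invented numbers (ear pointiness and snout length), and it uses a simple nearest-centroid rule in place of a real neural network. The function names `train` and `predict` are hypothetical.

```python
import math

def train(examples):
    """Training: compute the average feature vector (centroid) of each class."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def predict(centroids, features):
    """Return (label, confidence); closer centroids get a larger share of the score."""
    scores = {
        label: math.exp(-math.dist(centroid, features))
        for label, centroid in centroids.items()
    }
    total = sum(scores.values())
    label = max(scores, key=scores.get)
    return label, scores[label] / total

# Invented toy features: (ear pointiness, snout length), both between 0 and 1.
training = [
    ((0.9, 0.2), "cat"), ((0.8, 0.3), "cat"),
    ((0.3, 0.8), "dog"), ((0.2, 0.9), "dog"),
]
centroids = train(training)
label, confidence = predict(centroids, (0.85, 0.25))
print(label, round(confidence, 2))
```

The model never receives a rule such as "cats have pointy ears". It only summarises whatever regularities the examples happen to contain, which is exactly why the choice of examples matters so much.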

Here’s how things can get problematic though.

Although the AI finds common patterns among similarly labelled images, it doesn’t mean that those patterns are the right ones.

For example, a machine learning algorithm trained on images of sheep might mistakenly start classifying images of grassy landscape as “sheep,” because all its training images contained large expanses of grass, even larger than the sheep themselves.

AI bias can crop up in various fields.

In the recruiting domain, a machine learning model trained on a set of job applicant CVs and their outcomes (hired or not hired) might end up basing its behaviour on the wrong correlations, such as gender, race, area of residence or other characteristics.

For instance, if you train your AI on CVs of white males who live in New York, it might mistakenly think you’ve hired those people because of their gender or area of residence.

It will then start rejecting applicants with the right skills if they’re female or don’t live in New York.
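The New York example can be sketched with a deliberately crude learner. The code below is an illustration under stated assumptions, not the game's algorithm or any real hiring system: the history is invented, and the hypothetical function `best_single_rule` simply picks the one attribute value that best reproduces past decisions.

```python
def best_single_rule(history, features):
    """Pick the (feature, value) pair that best reproduces past hiring decisions."""
    best, best_accuracy = None, -1.0
    for feature in features:
        for value in sorted({row[feature] for row in history}):
            # The rule "hire if row[feature] == value" is right when it matches history.
            matches = sum((row[feature] == value) == row["hired"] for row in history)
            accuracy = matches / len(history)
            if accuracy > best_accuracy:
                best, best_accuracy = (feature, value), accuracy
    return best, best_accuracy

def would_hire(rule, applicant):
    feature, value = rule
    return applicant[feature] == value

# Invented history: every past hire happened to live in New York,
# and one skilled applicant from elsewhere was turned down for unrelated reasons.
history = [
    {"skilled": True,  "city": "New York", "hired": True},
    {"skilled": True,  "city": "New York", "hired": True},
    {"skilled": True,  "city": "New York", "hired": True},
    {"skilled": False, "city": "Boston",   "hired": False},
    {"skilled": False, "city": "Chicago",  "hired": False},
    {"skilled": True,  "city": "Boston",   "hired": False},
]

rule, accuracy = best_single_rule(history, ["skilled", "city"])
applicant = {"skilled": True, "city": "Seattle"}
decision = would_hire(rule, applicant)
print(rule, accuracy, decision)
```

On this data, living in New York explains the past decisions perfectly, while skill explains only five of the six, so the learner settles on the city. A skilled applicant from Seattle is then rejected, even though nobody ever told the model that location should matter.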

Most of the literature on algorithmic bias focuses on discrimination against humans.

But AI bias can arise from any correlation between training examples, such as lighting conditions, image compression algorithms, brands of devices and other parameters that have no relation to the problem the machine learning algorithm is supposed to solve.

AI bias doesn’t mean machine learning is racist

With discrimination and other ethical concerns becoming a growing focus for AI industry experts and analysts, many developers take precautions to avoid algorithmic bias where humans are concerned.

But AI bias can also manifest itself in complicated and inconspicuous ways.

For instance, developers might clean their training datasets to remove problematic parameters such as gender and race.

But during training, the machine learning model might find other correlations that hint at gender and race.

Things such as the school the applicant went to or the area they live in might contain hidden relations to their race and can cause the AI model to pick up misleading patterns.
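One rough way to see why removing a sensitive column is not enough is to check how well the remaining columns can guess it. The sketch below uses invented records and a hypothetical helper, `proxy_strength`; real audits rely on more careful statistical tests, so treat this only as an illustration of the idea.

```python
from collections import Counter, defaultdict

def proxy_strength(rows, proxy, protected):
    """Share of rows whose protected attribute is guessed correctly from the
    proxy column alone, by always guessing the most common group per proxy value."""
    by_value = defaultdict(Counter)
    for row in rows:
        by_value[row[proxy]][row[protected]] += 1
    correct = sum(counts.most_common(1)[0][1] for counts in by_value.values())
    return correct / len(rows)

def baseline(rows, protected):
    """Accuracy of guessing the single most common group for everyone."""
    counts = Counter(row[protected] for row in rows)
    return counts.most_common(1)[0][1] / len(rows)

# Invented applicants. "group" stands in for a protected attribute that the
# developers plan to drop before training; "neighbourhood" would stay in.
applicants = [
    {"neighbourhood": "North", "group": "A"},
    {"neighbourhood": "North", "group": "A"},
    {"neighbourhood": "North", "group": "A"},
    {"neighbourhood": "North", "group": "B"},
    {"neighbourhood": "South", "group": "B"},
    {"neighbourhood": "South", "group": "B"},
    {"neighbourhood": "South", "group": "B"},
    {"neighbourhood": "South", "group": "A"},
]

strength = proxy_strength(applicants, "neighbourhood", "group")
chance = baseline(applicants, "group")
print(strength, chance)
```

Here the neighbourhood reveals the group for six of the eight applicants, against four of eight for a blind guess. A model trained without the "group" column can still recover much of it through the address.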

None of this means AI is racist or biased in the same way that humans are.

Machine learning algorithms know nothing about jobs, gender, race, skills and discrimination.

They’re just very efficient statistical machines that can find correlations and patterns that would go unnoticed by humans.

The best description I’ve read about AI bias comes from a recent blog post from Benedict Evans: “[It] is completely false to say that ‘AI is maths, so it cannot be biased’.”

“But it is equally false to say that ML [machine learning] is ‘inherently biased’.”

“ML finds patterns in data — what patterns depends on the data, and the data is up to us, and what we do with it is up to us.”

The first step toward dealing with AI bias is to educate more people about it.

In this regard, Survival of the Best Fit does a great job.

* Ben Dickson is a software engineer and the founder of TechTalks. He tweets at @bendee983.

This article first appeared at bdtechtalks.com.