James Vincent* says researchers have created a printed patch that can make you all but invisible to AI-powered surveillance.

The rise of AI-powered surveillance is extremely worrying.

The ability of governments to track and identify citizens en masse could spell an end to public anonymity.

But as researchers have shown time and time again, there are ways to trick such systems.

The latest example comes from a group of engineers at KU Leuven in Belgium.

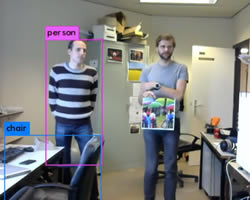

In a paper shared recently on the preprint server arXiv, these students show how simple printed patterns can fool an AI system that’s designed to recognise people in images.

If you print off one of the students’ specially designed patches and hang it around your neck, from an AI’s point of view, you may as well have slipped under an invisibility cloak.

As the researchers write: “We believe that, if we combine this technique with a sophisticated clothing simulation, we can design a T-shirt print that can make a person virtually invisible for automatic surveillance cameras.”

(They don’t mention it, but this is, famously, an important plot device in the sci-fi novel Zero History by William Gibson.)

This may seem bizarre, but it’s actually a well-known phenomenon in the AI world.

These sorts of patterns are known as adversarial examples. They take advantage of the brittle intelligence of computer vision systems to trick them into seeing what is not there, or, as in this case, into missing what is.
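For readers curious about the mechanism, here is a minimal sketch of a classic adversarial attack, the fast gradient sign method, run against a made-up linear "person detector". It is not the KU Leuven technique, which optimises a printed patch against YOLOv2. The detector, its weights and the pixel values below are all hypothetical. The point it illustrates is that nudging every pixel by a tiny amount, each in the direction that lowers the detector's score, adds up across many pixels.

```python
# Hypothetical toy "person detector": a linear score over pixel intensities.
# A positive score means "person detected". Weights are invented for illustration.


def score(weights, bias, pixels):
    """Linear detector score: sum of w_i * x_i, plus a bias."""
    return sum(w * x for w, x in zip(weights, pixels)) + bias


def sign(v):
    """Return +1, 0 or -1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_perturb(weights, pixels, epsilon):
    """One fast-gradient-sign step that lowers the detector's score.

    For a linear score, the gradient with respect to each pixel is just that
    pixel's weight, so each pixel moves by epsilon against the weight's sign.
    Results are clamped to the valid [0, 1] intensity range.
    """
    return [
        min(1.0, max(0.0, x - epsilon * sign(w)))
        for w, x in zip(weights, pixels)
    ]


if __name__ == "__main__":
    n = 1000
    # Hypothetical weights alternating +0.1 / -0.1, and an image the detector "sees".
    weights = [0.1 if i % 2 == 0 else -0.1 for i in range(n)]
    image = [0.6 if i % 2 == 0 else 0.4 for i in range(n)]
    bias = -5.0

    adversarial = fgsm_perturb(weights, image, epsilon=0.06)
    print("original score:", round(score(weights, bias, image), 3))
    print("adversarial score:", round(score(weights, bias, adversarial), 3))
    print("largest pixel change:", round(max(abs(a - b) for a, b in zip(image, adversarial)), 3))
```

With 1,000 pixels and weights of 0.1 in magnitude, changing each pixel by at most 0.06 lowers the score by 0.06 × 100 = 6, enough to flip it from about 5 (detected) to about −1 (not detected). Real detectors are not linear, so practical attacks typically take many small gradient steps instead of one.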

In the past, adversarial examples have been used to fool facial recognition systems.

(Just wear a special pair of glasses, and the AI will think you’re Milla Jovovich.)

They’ve been turned into stickers, printed onto 3D objects, and have even been used to make art that can fool algorithms.

Many researchers have warned that adversarial examples have dangerous potential.

They could be used to fool self-driving cars into reading a stop sign as a lamppost, for example, or they could trick medical AI vision systems that are designed to identify diseases.

This could be done for the purposes of medical fraud or even to intentionally cause harm.

In the case of this recent research — which we spotted via Google researcher David Ha — some caveats do apply.

Most importantly, the adversarial patch developed by the students can fool only one specific object-detection algorithm, YOLOv2.

It doesn’t work against even off-the-shelf computer vision systems developed by Google or other tech companies, and, of course, it doesn’t work if a person is looking at the image.

This means the idea of a T-shirt that makes you invisible to all surveillance systems remains in the realm of science fiction — for the moment, at least.

As AI surveillance is deployed around the world, more and more of us might crave a way to regain our anonymity.

Who knows: the hallucinogenic swirls of adversarial examples could end up being the next big fashion trend.

* James Vincent reports on AI and robotics for The Verge.

This article first appeared at www.theverge.com.