AI is transforming government work, but without careful oversight it could create serious governance challenges. Photo: Michelle Kroll.

In government, where every buck is hard earned by a taxpayer and efficiency is key, AI seems a no-brainer.

AI can parse complex documents instantly, automate routine administrative tasks and surface key insights from vast datasets to support faster, smarter government decisions. It’s the kind of efficiency booster that feels too good to ignore.

While AI has already seeped into almost every aspect of public service delivery, experts remind us that adopting AI tools in government isn’t like installing a new piece of software.

In an ideal world (as outlined in the AI Plan for the Australian Public Service 2025), every public servant would have the foundational training, capability support, and guidance needed to use AI tools safely and responsibly, supported by leadership from chief AI officers.

But Mojo Up director Daniel Buchanan says when it comes to the responsible use of AI in government, it all starts with the magic words: ongoing governance.

“AI and data security need to be monitored continuously as part of a broader governance piece,” he says.

“Some agencies don’t have the capability yet, which is one of the reasons the government is now mandating agencies have a chief AI officer.”

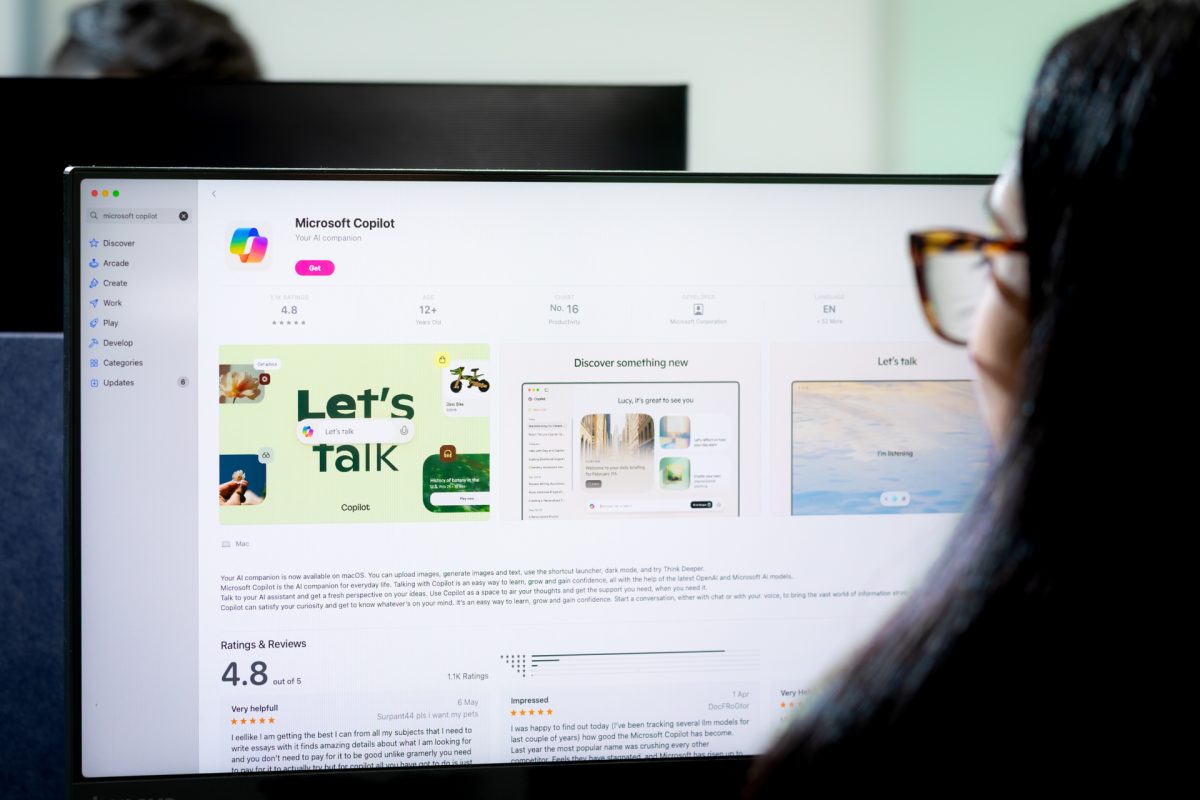

An obvious concern is that AI tools such as Copilot treat anything you can access as a source of knowledge and can automatically surface that information, whether or not you should have that access.

Setting up the right permissions is critical. When you get it wrong, the fallout can be serious.

The classic example is human resources information, such as payroll data.

Another way to limit this leakage is to reduce duplication and sharing through good governance and document lifecycle management.

“SharePoint sites tend to accumulate over time. People get added to groups and channels, change roles, and permissions aren’t cleaned up,” Daniel says.

“In our experience over years of working with agencies, we’ve seen examples where hundreds or thousands of SharePoint sites are no longer active, but that information is still there.

“It’s a legacy trove of information, and once you throw in Copilot, it becomes part of what the tool uses to provide information.”
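To make the clean-up problem concrete, here is a minimal sketch of the kind of check an agency might run over an inventory of sites. It is an illustration only: the `SiteRecord` structure, the sample sites and the one-year idle threshold are all assumptions, and it does not call SharePoint or Copilot.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class SiteRecord:
    """Hypothetical inventory entry for one collaboration site."""
    name: str
    last_activity: date
    members: list[str]


def find_stale_sites(sites, today, max_idle_days=365):
    """Return sites idle longer than max_idle_days that people can still access."""
    cutoff = today - timedelta(days=max_idle_days)
    return [s for s in sites if s.last_activity < cutoff and s.members]


# Made-up sample data, for illustration only.
sites = [
    SiteRecord("payroll-2019", date(2019, 6, 30), ["alice", "bob"]),
    SiteRecord("project-current", date(2025, 5, 1), ["alice"]),
    SiteRecord("archived-empty", date(2018, 1, 1), []),
]

stale = find_stale_sites(sites, today=date(2025, 6, 1))
for site in stale:
    print(f"{site.name}: idle since {site.last_activity}, "
          f"{len(site.members)} members still have access")
```

In this made-up inventory only `payroll-2019` is flagged: it has been idle for years, yet two people can still reach it, which is exactly the kind of legacy content an AI assistant could surface.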

Mojo Up’s Daniel Buchanan says AI and data management need to be reviewed frequently as part of a broader governance piece. Photo: Michelle Kroll.

Several safeguards already exist. For example, Copilot honours labelling and information classification, which gives users more control over sensitive information.

“You can implement a heap of automated policies and controls based on that labelling. You can effectively set boundaries for what Copilot can and can’t use,” Daniel says.

“Agencies that take that seriously and do a thorough job of educating staff on correct labelling conventions will be far better positioned to govern how Copilot functions within their environment.”
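The idea of a label-based boundary can be sketched in a few lines. This is a simplified illustration, not how Copilot or any Microsoft product implements its controls: the `Document` structure, the allowed-label list and the choice to block unlabelled documents by default are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed policy: only these labels may be used by the AI assistant.
ALLOWED_LABELS = {"UNOFFICIAL", "OFFICIAL"}


@dataclass
class Document:
    """Hypothetical document with an optional sensitivity label."""
    title: str
    label: Optional[str]


def assistant_may_use(doc):
    """Allow a document only if it carries an explicitly allowed label.

    Unlabelled documents (label is None) are blocked, so a missing
    label fails closed rather than open.
    """
    return doc.label in ALLOWED_LABELS


# Made-up sample data, for illustration only.
docs = [
    Document("Team newsletter", "OFFICIAL"),
    Document("Payroll export", "OFFICIAL: Sensitive"),
    Document("Old meeting notes", None),
]

usable = [d.title for d in docs if assistant_may_use(d)]
print(usable)
```

Under these assumptions, only the newsletter is available to the assistant. The unlabelled meeting notes are excluded along with the payroll export, which shows why consistent labelling by staff matters: a policy like this can only act on the labels it is given.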

But when a platform can access all the data in your environment, its behaviour becomes difficult to fully map or anticipate.

“For most people, the reach of Copilot, what information it could potentially surface, is a question much like ‘How long is a piece of string?’” Daniel says. “Not all government agencies have mature enough AI practices in place to take it on and to keep on top of it.”

Mojo Up has put together a basic offering to help agencies get on the maturity path, bringing visibility to risks such as oversharing and risky behaviour, and giving senior management the tools to make governance and risk decisions.

“It’s not about disruptive policies that interrupt workflow. It comes back to having oversight and controls in place to protect the end user.”

For more information, visit Mojo Up.

Original Article published by Dione David on Region Canberra.