Kyle Wiggers* says researchers have been able to foil people-detecting AI with an ‘adversarial’ T-shirt.

It’s a well-established fact that object- and face-detecting algorithms are vulnerable to adversarial attack, as evidenced by a 2014 study conducted by researchers at Google and New York University.

That’s to say the models can be deceived by specially crafted patches attached to real-world targets.

Most research in adversarial attacks involves rigid objects like eyeglass frames, stop signs, or cardboard.

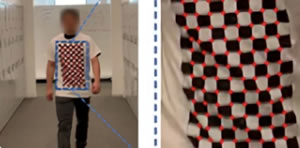

But scientists at Northwestern University and the MIT-IBM Watson AI Lab propose what they are calling an ‘adversarial’ T-shirt, one with a printed image that evades person-detectors even when it’s deformed by a wearer’s changing pose.

In a preprint paper, they claim it manages to achieve up to 79 per cent and 63 per cent success rates in digital and physical worlds, respectively, against the popular YOLOv2 model.

This is similar to a study conducted by engineers from KU Leuven in Belgium earlier this year that showed how patterns printed on patches worn around the neck could be used to fool person-detecting AI.

Incidentally, the KU Leuven team speculated that their technique could be combined with a clothing simulation to design such a T-shirt.

The researchers from the latest study note that a number of adversarial transformations are commonly used to fool classifiers, including scaling, translation, rotation, brightness, noise, and saturation adjustment.
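As a rough illustration of what sampling such transformations can look like, the sketch below draws a random scale, rotation, and translation as a single affine matrix, and applies random brightness and noise to a patch. It is not the researchers’ code: the function names, parameter ranges, and the choice of NumPy are assumptions made for this example, and saturation adjustment is left out for brevity.

```python
import numpy as np

def random_affine(rng, max_scale=0.2, max_angle=np.pi / 12, max_shift=0.1):
    """Sample a 3x3 homogeneous matrix combining scale, rotation, translation.

    All ranges are illustrative assumptions, not values from the paper.
    """
    scale = 1.0 + rng.uniform(-max_scale, max_scale)
    theta = rng.uniform(-max_angle, max_angle)
    tx, ty = rng.uniform(-max_shift, max_shift, size=2)
    c, s = np.cos(theta), np.sin(theta)
    return np.array([
        [scale * c, -scale * s, tx],
        [scale * s,  scale * c, ty],
        [0.0,        0.0,       1.0],
    ])

def jitter_pixels(patch, rng, max_brightness=0.1, noise_std=0.02):
    """Apply a random brightness shift and Gaussian noise to a patch in [0, 1]."""
    brightness = rng.uniform(-max_brightness, max_brightness)
    noisy = patch + brightness + rng.normal(0.0, noise_std, size=patch.shape)
    return np.clip(noisy, 0.0, 1.0)

rng = np.random.default_rng(0)
matrix = random_affine(rng)
patch = rng.uniform(size=(8, 8, 3))   # stand-in for a printed pattern
jittered = jitter_pixels(patch, rng)
```

An attack would typically average its objective over many such random draws, so that the pattern keeps working across viewing conditions rather than in one fixed pose.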

But they say these are largely insufficient to model the cloth deformation caused by a moving person’s pose changes.

Instead, they employed a data interpolation and smoothing technique called Thin Plate Spline (TPS), which models coordinate transformations with an affine component (which preserves points, straight lines, and planes) and a non-affine component, providing a means of learning adversarial patterns for non-rigid objects.

The researchers’ T-shirt has a checkerboard pattern, where each intersection between two checkerboard grid regions serves as a control point for generating the TPS transformation.
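To make the idea concrete, here is a minimal sketch of a standard 2D thin plate spline fit, using a small grid of control points as a stand-in for checkerboard intersections. It is a textbook formulation, not the researchers’ implementation: the function names, the 3x3 grid, and the random displacements are all assumptions for illustration.

```python
import numpy as np

def tps_kernel(r2):
    """Radial basis U(r) = r^2 log r, computed from squared distances."""
    out = np.zeros_like(r2)
    mask = r2 > 0
    out[mask] = 0.5 * r2[mask] * np.log(r2[mask])  # r^2 log r = 0.5 r^2 log(r^2)
    return out

def fit_tps(src, dst):
    """Solve for the non-affine weights and affine part mapping src -> dst."""
    n = src.shape[0]
    d2 = ((src[:, None, :] - src[None, :, :]) ** 2).sum(-1)
    P = np.hstack([np.ones((n, 1)), src])          # rows of [1, x, y]
    L = np.zeros((n + 3, n + 3))
    L[:n, :n] = tps_kernel(d2)
    L[:n, n:] = P
    L[n:, :n] = P.T
    rhs = np.zeros((n + 3, 2))
    rhs[:n] = dst
    params = np.linalg.solve(L, rhs)
    return params[:n], params[n:]                  # (n, 2) weights, (3, 2) affine

def apply_tps(points, src, weights, affine):
    """Warp arbitrary points with a fitted spline."""
    d2 = ((points[:, None, :] - src[None, :, :]) ** 2).sum(-1)
    P = np.hstack([np.ones((points.shape[0], 1)), points])
    return tps_kernel(d2) @ weights + P @ affine

# Hypothetical control points: a 3x3 grid standing in for checkerboard corners.
xs, ys = np.meshgrid(np.linspace(0.0, 1.0, 3), np.linspace(0.0, 1.0, 3))
src = np.column_stack([xs.ravel(), ys.ravel()])
rng = np.random.default_rng(0)
dst = src + rng.normal(scale=0.03, size=src.shape)  # simulated cloth wrinkle

weights, affine = fit_tps(src, dst)
warped = apply_tps(src, src, weights, affine)
print("max control-point error:", np.abs(warped - dst).max())
```

The fitted map passes through every control point exactly (up to numerical error), and the `affine` term captures the rigid-like part of the motion while `weights` capture the bending. When the control points do not move at all, the weights vanish and the map reduces to the identity.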

In a series of experiments, the team collected two digital datasets and one real-world dataset for learning and testing their proposed attack algorithm in both the digital and the physical worlds.

The training corpus contained 30 videos of a virtual moving person in a digital environment wearing the adversarial T-shirt across four different scenes, while the second contained 10 videos captured in the same setting but with different virtual people.

The third — a real-world dataset — comprised 10 test videos of a moving person wearing a physical adversarial T-shirt.

In simulation, the researchers report attack success rates of 65 per cent and 79 per cent when attacking a person-detecting R-CNN model and YOLOv2, respectively.

In real-world tests, they say the adversarial T-shirt fools YOLOv2 and R-CNN upwards of 65 per cent of the time — at least when only a single T-shirt wearer is within view.

With two or more people, the success rate drops.
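For readers wondering how such a figure might be tallied, the sketch below assumes the success rate is simply the fraction of test video frames in which the detector fails to find the T-shirt wearer. That definition and the function name are assumptions made for illustration; the article does not spell out the exact metric.

```python
def attack_success_rate(person_detected):
    """Fraction of frames in which the wearer was NOT detected.

    `person_detected` is an iterable of booleans, one per test frame
    (hypothetical input format chosen for this example).
    """
    frames = list(person_detected)
    if not frames:
        raise ValueError("need at least one frame")
    missed = sum(1 for detected in frames if not detected)
    return missed / len(frames)

# Four frames, detector misses the wearer in three of them.
rate = attack_success_rate([False, False, True, False])
print(rate)  # 0.75
```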

The approach likely wouldn’t fool more sophisticated object- and people-detecting models from the likes of Amazon Web Services, Google Cloud Platform, and Microsoft Azure, of course.

But the researchers assert their work is a first step toward adversarial wearables that can evade detection of moving persons.

Since T-shirts are non-rigid objects, “deformation induced by pose change of a moving person is taken into account when generating adversarial perturbations,” wrote the paper’s co-authors.

“Based on our [study], we hope to provide some implications on how the adversarial perturbations can be implemented with human clothing, accessories, paint on face, and other wearables.”

* Kyle Wiggers is a technology journalist and AI correspondent for VentureBeat. He tweets at @Kyle_L_Wiggers. His website is kylewiggers.com.

This article first appeared at venturebeat.com